
One-click Docker install script

#!/bin/bash

# Print usage information for the script arguments
function help_info(){
    echo -e "\033[32mPass one of the following arguments to the script:\033[0m"
    echo -e "\033[32m+-----------+-------------------------------------------+\033[0m"
    printf "\033[32m| %-9s | %-41s |\033[0m\n" "Argument" "Description"
    echo -e "\033[32m+-----------+-------------------------------------------+\033[0m"
    printf "\033[32m| %-9s | %-41s |\033[0m\n" "offline" "Offline install"
    printf "\033[32m| %-9s | %-41s |\033[0m\n" "online" "Online install (requires internet access)"
    printf "\033[32m| %-9s | %-41s |\033[0m\n" "remove" "Clean up the install directory"
    printf "\033[32m| %-9s | %-41s |\033[0m\n" "help" "Show this help"
    printf "\033[32m| %-9s | %-41s |\033[0m\n" "uninstall" "Uninstall Docker"
    echo -e "\033[32m+-----------+-------------------------------------------+\033[0m"
    echo -e "\033[32monline accepts an optional Docker version as the second argument (default: 24.0.2)\033[0m"
}

# Download the static Docker package.
# The version is the function's own first argument: inside a function, $1 and $2
# refer to the function's arguments, not the script's, so the caller must pass it in.
function download_other_version(){
    cd /root/docker_install_pkg/ || return 1
    # Default to 24.0.2 when no version is requested
    docker_version=${1:-24.0.2}
    # Retry a few times instead of looping forever when the download keeps failing
    for attempt in 1 2 3; do
        if wget -O "docker-$docker_version.tgz" "https://download.docker.com/linux/static/stable/x86_64/docker-$docker_version.tgz" &> /dev/null; then
            echo -e "\033[32mDownloaded offline package docker-$docker_version.tgz to /root/docker_install_pkg/\033[0m"
            return 0
        fi
    done
    echo -e "\033[31mFailed to download docker-$docker_version.tgz\033[0m"
    return 1
}

# Locate a local docker-24.0.2.tgz and copy it into the install directory
function find_pkg(){
    # Nothing to do if the package is already in place
    [ -f /root/docker_install_pkg/docker-24.0.2.tgz ] && return 0
    local pkg
    pkg=$(find / -name 'docker-24.0.2.tgz' 2> /dev/null | head -n 1)
    if [ -z "$pkg" ]; then
        echo -e "\033[31mdocker-24.0.2.tgz was not found on this machine\033[0m"
        return 1
    fi
    cp -a "$pkg" /root/docker_install_pkg/
}

# Offline install: unpack the binaries, write the systemd unit and daemon.json
function install_service(){
    cd /root/docker_install_pkg/ || return 1
    tar -zxf docker*.tgz
    cp -p docker/* /usr/bin/
    # Quote the heredoc delimiter so that $MAINPID is written literally
    # instead of being expanded to an empty string by the shell
    cat > /usr/lib/systemd/system/docker.service << 'EOF'
[Unit]
Description=Docker Application Container Engine
Documentation=http://docs.docker.com
After=network.target docker.socket

[Service]
Type=notify
EnvironmentFile=-/run/flannel/docker
WorkingDirectory=/usr/local/bin
# NOTE: -H tcp://0.0.0.0:4243 exposes the Docker API on all interfaces without TLS;
# restrict or remove it on untrusted networks.
ExecStart=/usr/bin/dockerd \
    -H tcp://0.0.0.0:4243 \
    -H unix:///var/run/docker.sock \
    --selinux-enabled=false \
    --log-opt max-size=1g
ExecReload=/bin/kill -s HUP $MAINPID
# Having non-zero Limit*s causes performance problems due to accounting overhead
# in the kernel. We recommend using cgroups to do container-local accounting.
LimitNOFILE=infinity
LimitNPROC=infinity
LimitCORE=infinity
# Uncomment TasksMax if your systemd version supports it.
# Only systemd 226 and above support this version.
#TasksMax=infinity
TimeoutStartSec=0
# set delegate yes so that systemd does not reset the cgroups of docker containers
Delegate=yes
# kill only the docker process, not all processes in the cgroup
KillMode=process
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF
    mkdir -p /etc/docker
    cat > /etc/docker/daemon.json << EOF
{
  "registry-mirrors": [
    "https://2a6bf1988cb6428c877f723ec7530dbc.mirror.swr.myhuaweicloud.com",
    "https://docker.m.daocloud.io",
    "https://hub-mirror.c.163.com",
    "https://mirror.baidubce.com",
    "https://your_preferred_mirror",
    "https://dockerhub.icu",
    "https://docker.registry.cyou",
    "https://docker-cf.registry.cyou",
    "https://dockercf.jsdelivr.fyi",
    "https://docker.jsdelivr.fyi",
    "https://dockertest.jsdelivr.fyi",
    "https://mirror.aliyuncs.com",
    "https://dockerproxy.com",
    "https://docker.nju.edu.cn",
    "https://docker.mirrors.sjtug.sjtu.edu.cn",
    "https://docker.mirrors.ustc.edu.cn",
    "https://mirror.iscas.ac.cn",
    "https://docker.rainbond.cc"
  ]
}
EOF
    systemctl daemon-reload
    systemctl start docker
    systemctl enable docker > /dev/null
    echo -e "\033[32mDocker service started and enabled at boot.\033[0m"
}

# Stop early when Docker is already running
function service_alive(){
    if systemctl is-active docker > /dev/null; then
        echo -e "\033[31mDocker is already running; nothing to install.\033[0m"
        exit 0
    fi
}

# Uninstall Docker
function uninstall_docker(){
    docker stop $(docker ps -aq) &> /dev/null
    docker rm $(docker ps -aq) &> /dev/null
    docker rmi $(docker images -q) &> /dev/null
    systemctl stop docker
    # The unit file is installed under /usr/lib/systemd/system, so remove it from there as well
    rm -rf /usr/lib/systemd/system/docker.service /etc/systemd/system/docker.service /usr/bin/containerd /usr/bin/containerd-shim /usr/bin/ctr /usr/bin/runc /usr/bin/docker* /etc/docker/ /var/lib/docker
    systemctl daemon-reload
    echo -e "\033[32mDocker has been uninstalled.\033[0m"
}

# Entry point
if [ "$(whoami)" = 'root' ]; then
    # Install directory
    mkdir -p /root/docker_install_pkg/
    case $1 in
        offline )
            service_alive
            # Offline install
            find_pkg && install_service
            ;;
        online )
            service_alive
            # Online install; $2 is the optional Docker version
            download_other_version "$2" && install_service
            ;;
        remove )
            # Clean up the install directory
            if [ -d /root/docker_install_pkg/ ]; then
                rm -r /root/docker_install_pkg/
                echo -e "\033[32mCleanup complete.\033[0m"
            else
                echo -e "\033[31mAlready cleaned up; nothing to do.\033[0m"
            fi
            ;;
        uninstall )
            # Uninstall Docker
            uninstall_docker
            ;;
        * | help )
            # Help
            help_info
            ;;
    esac
else
    echo -e "\033[31mThe current user is not root; run the installation as root.\033[0m"
fi
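The version handling in the online install path can be sketched in isolation. This is a minimal illustration, assuming a hypothetical helper named docker_tgz_url and an optional architecture argument that the script itself does not have; the base URL and the 24.0.2 default are taken from the script.

```shell
#!/bin/bash
# Hypothetical helper (not part of the original script): build the download URL
# of the static Docker package for a given version.
# $1 = Docker version (defaults to 24.0.2, as in the script)
# $2 = architecture directory (assumed parameter, defaults to x86_64)
docker_tgz_url() {
    local version="${1:-24.0.2}"
    local arch="${2:-x86_64}"
    echo "https://download.docker.com/linux/static/stable/${arch}/docker-${version}.tgz"
}
```

Because the positional parameters inside a function are the function's own, the script-level version argument has to be forwarded explicitly, for example docker_tgz_url "$2" from the case branch.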


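Proxy service ("小飞机")

I have used this service for a long time and compared it with several providers; overall it offers the best value for money. It also supports check-ins to extend the subscription period, and anyone who wants an automated check-in method can join the group to discuss it. Browsing papers, downloading from GitHub, and watching YouTube are all fast. Overall it is quite stable, supports multiple devices online at the same time, and I will keep using it. Note: browse sensibly, access only content that is legal and compliant, and do not forward or discuss anything unrelated to IT.

Recommended registration options (choose any one of the three):
Register via https://glados.space/landing/2DF9O-3C3QB-766FS-YTHFS, which fills in the activation code automatically.
Register via https://2df9o-3c3qb-766fs-ythfs.glados.space, which fills in the activation code automatically.
Register directly with GLaDOS (the registration address is kept up to date at https://github.com/glados-network/GLaDOS) and then enter the invitation code 2DF9O-3C3QB-766FS-YTHFS to activate.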


k8s 1.28 high-availability setup: containerd cluster (08)

1 Deploy containerd

1.1 Install and configure containerd

Get the package:
wget https://github.com/containerd/containerd/releases/download/v1.7.9/cri-containerd-cni-1.7.9-linux-amd64.tar.gz

1.2 Install containerd

tar -xf cri-containerd-cni-1.7.9-linux-amd64.tar.gz -C /

The archive contains the following top-level directories:
etc opt usr
They are extracted into the matching directories under /, so no manual file copying is needed.

1.3 Generate and modify the configuration file

mkdir /etc/containerd
containerd config default >/etc/containerd/config.toml
# ls /etc/containerd/
config.toml

The configuration file shown below already contains this change; run the command only when starting from a freshly generated default. With the io.containerd.runc.v2 runtime, the systemd cgroup driver is enabled through SystemdCgroup under the runc options table. The legacy systemd_cgroup key in the CRI plugin section only applies to io.containerd.runtime.v1.linux, and setting it to true with runc v2 makes the CRI plugin fail to load.
sed -i 's@SystemdCgroup = false@SystemdCgroup = true@' /etc/containerd/config.toml

The configuration file shown below already contains this change; run the command only when starting from a freshly generated default.
sed -i 's@registry.k8s.io/pause:3.8@registry.aliyuncs.com/google_containers/pause:3.9@' /etc/containerd/config.toml

# [root@k8s-node02 k8s-work]# cat /etc/containerd/config.toml
disabled_plugins = []
imports = []
oom_score = 0
plugin_dir = ""
required_plugins = []
root = "/var/lib/containerd"
state = "/run/containerd"
temp = ""
version = 2

[cgroup]
path = ""

[debug]
address = ""
format = ""
gid = 0
level = ""
uid = 0

[grpc]
address = "/run/containerd/containerd.sock"
gid = 0
max_recv_message_size = 16777216
max_send_message_size = 16777216
tcp_address = ""
tcp_tls_ca = ""
tcp_tls_cert = ""
tcp_tls_key = ""
uid = 0

[metrics]
address = ""
grpc_histogram = false

[plugins]

[plugins."io.containerd.gc.v1.scheduler"]
deletion_threshold = 0
mutation_threshold = 100
pause_threshold = 0.02
schedule_delay = "0s"
startup_delay = "100ms"

[plugins."io.containerd.grpc.v1.cri"]
cdi_spec_dirs = ["/etc/cdi", "/var/run/cdi"]
device_ownership_from_security_context = false
disable_apparmor = false
disable_cgroup = false
disable_hugetlb_controller = true
disable_proc_mount = false
disable_tcp_service = true
drain_exec_sync_io_timeout = "0s"
enable_cdi = false
enable_selinux = false
enable_tls_streaming = false
enable_unprivileged_icmp = false
enable_unprivileged_ports = false
ignore_image_defined_volumes = false
image_pull_progress_timeout = "1m0s"
max_concurrent_downloads = 3
max_container_log_line_size = 16384
netns_mounts_under_state_dir = false
restrict_oom_score_adj = false
sandbox_image = "registry.aliyuncs.com/google_containers/pause:3.9"
selinux_category_range = 1024
stats_collect_period = 10
stream_idle_timeout = "4h0m0s"
stream_server_address = "127.0.0.1"
stream_server_port = "0"
systemd_cgroup = false
tolerate_missing_hugetlb_controller = true
unset_seccomp_profile = ""

[plugins."io.containerd.grpc.v1.cri".cni]
bin_dir = "/opt/cni/bin"
conf_dir = "/etc/cni/net.d"
conf_template = ""
ip_pref = ""
max_conf_num = 1
setup_serially = false

[plugins."io.containerd.grpc.v1.cri".containerd]
default_runtime_name = "runc"
disable_snapshot_annotations = true
discard_unpacked_layers = false
ignore_blockio_not_enabled_errors = false
ignore_rdt_not_enabled_errors = false
no_pivot = false
snapshotter = "overlayfs"

[plugins."io.containerd.grpc.v1.cri".containerd.default_runtime]
base_runtime_spec = ""
cni_conf_dir = ""
cni_max_conf_num = 0
container_annotations = []
pod_annotations = []
privileged_without_host_devices = false
privileged_without_host_devices_all_devices_allowed = false
runtime_engine = ""
runtime_path = ""
runtime_root = ""
runtime_type = ""
sandbox_mode = ""
snapshotter = ""

[plugins."io.containerd.grpc.v1.cri".containerd.default_runtime.options]

[plugins."io.containerd.grpc.v1.cri".containerd.runtimes]

[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc]
base_runtime_spec = ""
cni_conf_dir = ""
cni_max_conf_num = 0
container_annotations = []
pod_annotations = []
privileged_without_host_devices = false
privileged_without_host_devices_all_devices_allowed = false
runtime_engine = ""
runtime_path = ""
runtime_root = ""
runtime_type = "io.containerd.runc.v2"
sandbox_mode = "podsandbox"
snapshotter = ""

[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc.options]
BinaryName = ""
CriuImagePath = ""
CriuPath = ""
CriuWorkPath = ""
IoGid = 0
IoUid = 0
NoNewKeyring = false
NoPivotRoot = false
Root = ""
ShimCgroup = ""
SystemdCgroup = true

[plugins."io.containerd.grpc.v1.cri".containerd.untrusted_workload_runtime]
base_runtime_spec = ""
cni_conf_dir = ""
cni_max_conf_num = 0
container_annotations = []
pod_annotations = []
privileged_without_host_devices = false
privileged_without_host_devices_all_devices_allowed = false
runtime_engine = ""
runtime_path = ""
runtime_root = ""
runtime_type = ""
sandbox_mode = ""
snapshotter = ""

[plugins."io.containerd.grpc.v1.cri".containerd.untrusted_workload_runtime.options]

[plugins."io.containerd.grpc.v1.cri".image_decryption]
key_model = "node"

[plugins."io.containerd.grpc.v1.cri".registry]
config_path = ""

[plugins."io.containerd.grpc.v1.cri".registry.auths]

[plugins."io.containerd.grpc.v1.cri".registry.configs]

[plugins."io.containerd.grpc.v1.cri".registry.headers]

[plugins."io.containerd.grpc.v1.cri".registry.mirrors]

[plugins."io.containerd.grpc.v1.cri".x509_key_pair_streaming]
tls_cert_file = ""
tls_key_file = ""

[plugins."io.containerd.internal.v1.opt"]
path = "/opt/containerd"

[plugins."io.containerd.internal.v1.restart"]
interval = "10s"

[plugins."io.containerd.internal.v1.tracing"]
sampling_ratio = 1.0
service_name = "containerd"

[plugins."io.containerd.metadata.v1.bolt"]
content_sharing_policy = "shared"

[plugins."io.containerd.monitor.v1.cgroups"]
no_prometheus = false

[plugins."io.containerd.nri.v1.nri"]
disable = true
disable_connections = false
plugin_config_path = "/etc/nri/conf.d"
plugin_path = "/opt/nri/plugins"
plugin_registration_timeout = "5s"
plugin_request_timeout = "2s"
socket_path = "/var/run/nri/nri.sock"

[plugins."io.containerd.runtime.v1.linux"]
no_shim = false
runtime = "runc"
runtime_root = ""
shim = "containerd-shim"
shim_debug = false

[plugins."io.containerd.runtime.v2.task"]
platforms = ["linux/amd64"]
sched_core = false

[plugins."io.containerd.service.v1.diff-service"]
default = ["walking"]

[plugins."io.containerd.service.v1.tasks-service"]
blockio_config_file = ""
rdt_config_file = ""

[plugins."io.containerd.snapshotter.v1.aufs"]
root_path = ""

[plugins."io.containerd.snapshotter.v1.blockfile"]
fs_type = ""
mount_options = []
root_path = ""
scratch_file = ""

[plugins."io.containerd.snapshotter.v1.btrfs"]
root_path = ""

[plugins."io.containerd.snapshotter.v1.devmapper"]
async_remove = false
base_image_size = ""
discard_blocks = false
fs_options = ""
fs_type = ""
pool_name = ""
root_path = ""

[plugins."io.containerd.snapshotter.v1.native"]
root_path = ""

[plugins."io.containerd.snapshotter.v1.overlayfs"]
mount_options = []
root_path = ""
sync_remove = false
upperdir_label = false

[plugins."io.containerd.snapshotter.v1.zfs"]
root_path = ""

[plugins."io.containerd.tracing.processor.v1.otlp"]
endpoint = ""
insecure = false
protocol = ""

[plugins."io.containerd.transfer.v1.local"]
config_path = ""
max_concurrent_downloads = 3
max_concurrent_uploaded_layers = 3

[[plugins."io.containerd.transfer.v1.local".unpack_config]]
differ = ""
platform = "linux/amd64"
snapshotter = "overlayfs"

[proxy_plugins]

[stream_processors]

[stream_processors."io.containerd.ocicrypt.decoder.v1.tar"]
accepts = ["application/vnd.oci.image.layer.v1.tar+encrypted"]
args = ["--decryption-keys-path", "/etc/containerd/ocicrypt/keys"]
env = ["OCICRYPT_KEYPROVIDER_CONFIG=/etc/containerd/ocicrypt/ocicrypt_keyprovider.conf"]
path = "ctd-decoder"
returns = "application/vnd.oci.image.layer.v1.tar"

[stream_processors."io.containerd.ocicrypt.decoder.v1.tar.gzip"]
accepts = ["application/vnd.oci.image.layer.v1.tar+gzip+encrypted"]
args = ["--decryption-keys-path", "/etc/containerd/ocicrypt/keys"]
env = ["OCICRYPT_KEYPROVIDER_CONFIG=/etc/containerd/ocicrypt/ocicrypt_keyprovider.conf"]
path = "ctd-decoder"
returns = "application/vnd.oci.image.layer.v1.tar+gzip"

[timeouts]
"io.containerd.timeout.bolt.open" = "0s"
"io.containerd.timeout.metrics.shimstats" = "2s"
"io.containerd.timeout.shim.cleanup" = "5s"
"io.containerd.timeout.shim.load" = "5s"
"io.containerd.timeout.shim.shutdown" = "3s"
"io.containerd.timeout.task.state" = "2s"

[ttrpc]
address = ""
gid = 0
uid = 0

2 Download the libseccomp-2.5.1 package

wget http://rpmfind.net/linux/centos/8-stream/BaseOS/x86_64/os/Packages/libseccomp-2.5.1-1.el8.x86_64.rpm

2.1 Install the libseccomp-2.5.1 package

# Remove the existing version
rpm -qa | grep libseccomp
libseccomp-devel-2.3.1-4.el7.x86_64
libseccomp-2.3.1-4.el7.x86_64
rpm -e libseccomp-devel-2.3.1-4.el7.x86_64 --nodeps
rpm -e libseccomp-2.3.1-4.el7.x86_64 --nodeps
rpm -ivh libseccomp-2.5.1-1.el8.x86_64.rpm

2.2 Check the installed version to confirm the installation succeeded

rpm -qa | grep libseccomp

2.3 Install runc

The runc bundled in the package above has too many system dependencies, so installing it separately is recommended. Running the bundled runc fails with:
runc: symbol lookup error: runc: undefined symbol: seccomp_notify_respond

wget https://github.com/opencontainers/runc/releases/download/v1.1.10/runc.amd64
chmod +x runc.amd64

Replace the runc shipped in the original package:
mv runc.amd64 /usr/local/sbin/runc

# runc -v
VERSION: 1.1.10
commit: v1.1.10-0-g18a0cb0f
spec: 1.0.2-dev
go: go1.20.10
libseccomp: 2.5.1

systemctl enable --now containerd
systemctl status containerd
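Before restarting containerd it can help to confirm which cgroup setting the config file actually carries. The following is a rough sketch, assuming a hypothetical function name runc_uses_systemd_cgroup; it scans the file line by line with awk rather than parsing TOML, so it only recognizes the table layout that containerd config default produces.

```shell
#!/bin/bash
# Hypothetical check (illustrative only): succeed when SystemdCgroup = true is set
# inside the runc options table of a containerd config file.
# $1 = path to the config file (defaults to /etc/containerd/config.toml)
runc_uses_systemd_cgroup() {
    local config="${1:-/etc/containerd/config.toml}"
    awk '
        # Every table header switches the "inside runc options" flag on or off
        /^[ \t]*\[/ { in_opts = ($0 ~ /runtimes\.runc\.options\]/) }
        # Only a SystemdCgroup = true line inside that table counts
        in_opts && /^[ \t]*SystemdCgroup[ \t]*=[ \t]*true/ { found = 1 }
        END { exit !found }
    ' "$config"
}
```

A possible use is runc_uses_systemd_cgroup && systemctl restart containerd, so the restart only happens when the runc options table carries the systemd setting.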


k8s 1.28高可用搭建 kubelet集群09 1 部署kubelet在k8s-master01上操作 1.1 创建kubelet-bootstrap.kubeconfigBOOTSTRAP_TOKEN=$(awk -F "," '{print $1}' /etc/kubernetes/token.csv) kubectl config set-cluster kubernetes --certificate-authority=ca.pem --embed-certs=true --server=https://192.168.31.100:6443 --kubeconfig=kubelet-bootstrap.kubeconfig kubectl config set-credentials kubelet-bootstrap --token=${BOOTSTRAP_TOKEN} --kubeconfig=kubelet-bootstrap.kubeconfig kubectl config set-context default --cluster=kubernetes --user=kubelet-bootstrap --kubeconfig=kubelet-bootstrap.kubeconfig kubectl config use-context default --kubeconfig=kubelet-bootstrap.kubeconfig kubectl create clusterrolebinding cluster-system-anonymous --clusterrole=cluster-admin --user=kubelet-bootstrap kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --user=kubelet-bootstrap --kubeconfig=kubelet-bootstrap.kubeconfig kubectl describe clusterrolebinding cluster-system-anonymous kubectl describe clusterrolebinding kubelet-bootstrap 2 创建kubelet配置文件cat > kubelet.json << "EOF" { "kind": "KubeletConfiguration", "apiVersion": "kubelet.config.k8s.io/v1beta1", "authentication": { "x509": { "clientCAFile": "/etc/kubernetes/ssl/ca.pem" }, "webhook": { "enabled": true, "cacheTTL": "2m0s" }, "anonymous": { "enabled": false } }, "authorization": { "mode": "Webhook", "webhook": { "cacheAuthorizedTTL": "5m0s", "cacheUnauthorizedTTL": "30s" } }, "address": "192.168.31.34", "port": 10250, "readOnlyPort": 10255, "cgroupDriver": "systemd", "hairpinMode": "promiscuous-bridge", "serializeImagePulls": false, "clusterDomain": "cluster.local.", "clusterDNS": ["10.96.0.2"] } EOF 2.1 创建kubelet服务启动管理文件cat > kubelet.service << "EOF" [Unit] Description=Kubernetes Kubelet Documentation=https://github.com/kubernetes/kubernetes After=containerd.service Requires=containerd.service [Service] WorkingDirectory=/var/lib/kubelet ExecStart=/usr/local/bin/kubelet \ 
      --bootstrap-kubeconfig=/etc/kubernetes/kubelet-bootstrap.kubeconfig \
      --cert-dir=/etc/kubernetes/ssl \
      --kubeconfig=/etc/kubernetes/kubelet.kubeconfig \
      --config=/etc/kubernetes/kubelet.json \
      --container-runtime-endpoint=unix:///run/containerd/containerd.sock \
      --rotate-certificates \
      --pod-infra-container-image=registry.aliyuncs.com/google_containers/pause:3.9 \
      --root-dir=/etc/cni/net.d \
      --v=2
    Restart=on-failure
    RestartSec=5

    [Install]
    WantedBy=multi-user.target
    EOF

2.2 Sync the files to the cluster nodes

    cp kubelet-bootstrap.kubeconfig /etc/kubernetes/
    cp kubelet.json /etc/kubernetes/
    cp kubelet.service /usr/lib/systemd/system/

    for i in k8s-master02 k8s-master03 k8s-node01 k8s-node02; do scp kubelet-bootstrap.kubeconfig kubelet.json $i:/etc/kubernetes/; done
    for i in k8s-master02 k8s-master03 k8s-node01 k8s-node02; do scp ca.pem $i:/etc/kubernetes/ssl/; done
    for i in k8s-master02 k8s-master03 k8s-node01 k8s-node02; do scp kubelet.service $i:/usr/lib/systemd/system/; done

Note: the address field in kubelet.json must be changed to the IP address of the host it is installed on.

2.3 Create the directories and start the service

    mkdir -p /var/lib/kubelet
    mkdir -p /var/log/kubernetes

    systemctl daemon-reload
    systemctl enable --now kubelet
    systemctl status kubelet

Error

    [root@k8s-master01 k8s-work]# systemctl status kubelet -l
    ● kubelet.service - Kubernetes Kubelet
       Loaded: loaded (/usr/lib/systemd/system/kubelet.service; enabled; vendor preset: disabled)
       Active: activating (auto-restart) (Result: exit-code) since Tue 2023-11-21 21:20:18 CST; 4s ago
         Docs: https://github.com/kubernetes/kubernetes
      Process: 9376 ExecStart=/usr/local/bin/kubelet --bootstrap-kubeconfig=/etc/kubernetes/kubelet-bootstrap.kubeconfig --cert-dir=/etc/kubernetes/ssl --kubeconfig=/etc/kubernetes/kubelet.kubeconfig --config=/etc/kubernetes/kubelet.json --container-runtime-endpoint=unix:///run/containerd/containerd.sock --rotate-certificates --pod-infra-container-image=registry.aliyuncs.com/google_containers/pause:3.9 --root-dir=/etc/cni/net.d --v=2 (code=exited, status=1/FAILURE)
     Main PID: 9376 (code=exited, status=1/FAILURE)

    Nov 21 21:20:18 k8s-master01 kubelet[9376]: I1121 21:20:18.983936 9376 server.go:895] "Client rotation is on, will bootstrap in background"
    Nov 21 21:20:18 k8s-master01 kubelet[9376]: E1121 21:20:18.984353 9376 bootstrap.go:241] unable to read existing bootstrap client config from /etc/kubernetes/kubelet.kubeconfig: invalid configuration: [unable to read client-cert /etc/kubernetes/ssl/kubelet-client-current.pem for default-auth due to open /etc/kubernetes/ssl/kubelet-client-current.pem: no such file or directory, unable to read client-key /etc/kubernetes/ssl/kubelet-client-current.pem for default-auth due to open /etc/kubernetes/ssl/kubelet-client-current.pem: no such file or directory]
    Nov 21 21:20:18 k8s-master01 kubelet[9376]: I1121 21:20:18.985122 9376 bootstrap.go:101] "Use the bootstrap credentials to request a cert, and set kubeconfig to point to the certificate dir"
    Nov 21 21:20:18 k8s-master01 kubelet[9376]: I1121 21:20:18.985241 9376 server.go:952] "Starting client certificate rotation"
    Nov 21 21:20:18 k8s-master01 kubelet[9376]: I1121 21:20:18.985250 9376 certificate_manager.go:356] kubernetes.io/kube-apiserver-client-kubelet: Certificate rotation is enabled
    Nov 21 21:20:18 k8s-master01 kubelet[9376]: I1121 21:20:18.985472 9376 certificate_manager.go:356] kubernetes.io/kube-apiserver-client-kubelet: Rotating certificates
    Nov 21 21:20:18 k8s-master01 kubelet[9376]: I1121 21:20:18.985506 9376 dynamic_cafile_content.go:119] "Loaded a new CA Bundle and Verifier" name="client-ca-bundle::/etc/kubernetes/ssl/ca.pem"
    Nov 21 21:20:18 k8s-master01 systemd[1]: kubelet.service failed.
    Nov 21 21:20:18 k8s-master01 kubelet[9376]: I1121 21:20:18.985703 9376 dynamic_cafile_content.go:157] "Starting controller" name="client-ca-bundle::/etc/kubernetes/ssl/ca.pem"
    Nov 21 21:20:18 k8s-master01 kubelet[9376]: E1121 21:20:18.988960 9376 run.go:74] "command failed" err="failed to run Kubelet: validate service connection: validate CRI v1 runtime API for endpoint \"unix:///run/containerd/containerd.sock\": rpc error: code = Unimplemented desc = unknown service runtime.v1.RuntimeService"

Key to the error: check the containerd logs

    [root@k8s-master01 k8s-work]# journalctl -f -u containerd.service
    -- Logs begin at Tue 2023-11-21 18:23:41 CST. --
    Nov 21 21:35:47 k8s-master01 containerd[11532]: time="2023-11-21T21:35:47.984785204+08:00" level=info msg="loading plugtainerd.grpc.v1.version\"..." type=io.containerd.grpc.v1
    Nov 21 21:35:47 k8s-master01 containerd[11532]: time="2023-11-21T21:35:47.984794914+08:00" level=info msg="loading plugtainerd.grpc.v1.cri\"..." type=io.containerd.grpc.v1
    Nov 21 21:35:47 k8s-master01 containerd[11532]: time="2023-11-21T21:35:47.984984614+08:00" level=warning msg="failed ton io.containerd.grpc.v1.cri" error="invalid plugin config: `systemd_cgroup` only works for runtime io.containerd.runtim
    Nov 21 21:35:47 k8s-master01 containerd[11532]: time="2023-11-21T21:35:47.984999254+08:00" level=info msg="loading plugtainerd.tracing.processor.v1.otlp\"..." type=io.containerd.tracing.processor.v1
    Nov 21 21:35:47 k8s-master01 containerd[11532]: time="2023-11-21T21:35:47.985012723+08:00" level=info msg="skip loadingo.containerd.tracing.processor.v1.otlp\"..." error="no OpenTelemetry endpoint: skip plugin" type=io.containerd.tracing.1
    Nov 21 21:35:47 k8s-master01 containerd[11532]: time="2023-11-21T21:35:47.985020034+08:00" level=info msg="loading plugtainerd.internal.v1.tracing\"..." type=io.containerd.internal.v1
    Nov 21 21:35:47 k8s-master01 containerd[11532]: time="2023-11-21T21:35:47.985031233+08:00" level=info msg="skipping trasor initialization (no tracing plugin)" error="no OpenTelemetry endpoint: skip plugin"
    Nov 21 21:35:47 k8s-master01 containerd[11532]: time="2023-11-21T21:35:47.985226773+08:00" level=info msg=serving... adcontainerd/containerd.sock.ttrpc
    Nov 21 21:35:47 k8s-master01 containerd[11532]: time="2023-11-21T21:35:47.985249673+08:00" level=info msg=serving... adcontainerd/containerd.sock
    Nov 21 21:35:47 k8s-master01 containerd[11532]: time="2023-11-21T21:35:47.985291982+08:00" level=info msg="containerd s booted in 0.022170s"

Key error extracted

    time="2023-11-21T21:35:47.984984614+08:00" level=warning msg="failed ton io.containerd.grpc.v1.cri" error="invalid plugin config: `systemd_cgroup` only works for runtime io.containerd.runtim

Conclusion

I tried a number of fixes and none of them worked, so I took the drastic route: move the containerd config file out of the way and restart containerd, which then starts with its built-in defaults. If anyone knows the proper fix, please tell me; I would be very grateful.

    mv /etc/containerd/config.toml /root/config.toml.bak
    systemctl restart containerd

    # kubectl get nodes
    NAME          STATUS     ROLES    AGE     VERSION
    k8s-master1   NotReady   <none>   2m55s   v1.21.10
    k8s-master2   NotReady   <none>   45s     v1.21.10
    k8s-master3   NotReady   <none>   39s     v1.21.10
    k8s-worker1   NotReady   <none>   5m1s    v1.21.10

    # kubectl get csr
    NAME        AGE     SIGNERNAME                                    REQUESTOR           CONDITION
    csr-b949p   7m55s   kubernetes.io/kube-apiserver-client-kubelet   kubelet-bootstrap   Approved,Issued
    csr-c9hs4   3m34s   kubernetes.io/kube-apiserver-client-kubelet   kubelet-bootstrap   Approved,Issued
    csr-r8vhp   5m50s   kubernetes.io/kube-apiserver-client-kubelet   kubelet-bootstrap   Approved,Issued
    csr-zb4sr   3m40s   kubernetes.io/kube-apiserver-client-kubelet   kubelet-bootstrap   Approved,Issued

Note: once the kubelet service is confirmed to have started, approve the bootstrap requests on the master.

    [root@k8s-master01 k8s-work]# kubectl get nodes
    NAME           STATUS   ROLES    AGE     VERSION
    k8s-master01   Ready    <none>   62m     v1.28.4
    k8s-master02   Ready    <none>   46m     v1.28.4
    k8s-master03   Ready    <none>   5m51s   v1.28.4
    k8s-node01     Ready    <none>   4m58s   v1.28.4
    k8s-node02     Ready    <none>   2m33s   v1.28.4
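On the containerd warning above: the message says `systemd_cgroup` is only valid for the legacy v1 runtime, so one plausible cause is that config.toml sets `systemd_cgroup = true` at the CRI plugin level. That would stop the CRI plugin from loading and would explain kubelet's "unknown service runtime.v1.RuntimeService". This is an assumption, not a confirmed diagnosis of this cluster. A minimal sketch of a narrower fix than removing the file follows; the function name `fix_containerd_cgroup` is hypothetical, and it assumes the config was generated by `containerd config default` so that both keys are present.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not from the original post): patch a containerd
# config.toml so the CRI plugin can load while runc uses the systemd
# cgroup driver. Assumes both keys below already exist in the file.
fix_containerd_cgroup() {
    local cfg="$1"
    # Keep a backup next to the file before editing it in place.
    cp -p "$cfg" "$cfg.bak"
    # The plugin-level key is only valid for the legacy v1 runtime.
    sed -i 's/^\([[:space:]]*\)systemd_cgroup[[:space:]]*=[[:space:]]*true/\1systemd_cgroup = false/' "$cfg"
    # The runc (runtime v2) option selects the systemd cgroup driver.
    sed -i 's/^\([[:space:]]*\)SystemdCgroup[[:space:]]*=[[:space:]]*false/\1SystemdCgroup = true/' "$cfg"
}

# Usage on a node, followed by a restart of both services:
#   fix_containerd_cgroup /etc/containerd/config.toml
#   systemctl restart containerd kubelet
```

The sketch edits only the two cgroup keys, so other settings in config.toml (for example a custom sandbox image) survive, which moving the whole file away does not guarantee.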


k8s 1.28 high-availability setup: kube-proxy cluster 10

1 Deploy kube-proxy

1.1 Create the kube-proxy certificate signing request file

    cat > kube-proxy-csr.json << "EOF"
    {
      "CN": "system:kube-proxy",
      "key": {
        "algo": "rsa",
        "size": 2048
      },
      "names": [
        {
          "C": "CN",
          "ST": "Beijing",
          "L": "Beijing",
          "O": "kubemsb",
          "OU": "CN"
        }
      ]
    }
    EOF

1.2 Generate the certificate

    cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy

    # ls kube-proxy*
    kube-proxy.csr  kube-proxy-csr.json  kube-proxy-key.pem  kube-proxy.pem

1.3 Create the kubeconfig file

    kubectl config set-cluster kubernetes \
      --certificate-authority=ca.pem \
      --embed-certs=true \
      --server=https://192.168.31.100:6443 \
      --kubeconfig=kube-proxy.kubeconfig

    kubectl config set-credentials kube-proxy \
      --client-certificate=kube-proxy.pem \
      --client-key=kube-proxy-key.pem \
      --embed-certs=true \
      --kubeconfig=kube-proxy.kubeconfig

    kubectl config set-context default \
      --cluster=kubernetes \
      --user=kube-proxy \
      --kubeconfig=kube-proxy.kubeconfig

    kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig

1.4 Create the service configuration file

    cat > kube-proxy.yaml << "EOF"
    apiVersion: kubeproxy.config.k8s.io/v1alpha1
    bindAddress: 192.168.31.32
    clientConnection:
      kubeconfig: /etc/kubernetes/kube-proxy.kubeconfig
    clusterCIDR: 10.244.0.0/16
    healthzBindAddress: 192.168.31.32:10256
    kind: KubeProxyConfiguration
    metricsBindAddress: 192.168.31.32:10249
    mode: "ipvs"
    EOF

1.5 Create the systemd unit file

    cat > kube-proxy.service << "EOF"
    [Unit]
    Description=Kubernetes Kube-Proxy Server
    Documentation=https://github.com/kubernetes/kubernetes
    After=network.target

    [Service]
    WorkingDirectory=/var/lib/kube-proxy
    ExecStart=/usr/local/bin/kube-proxy \
      --config=/etc/kubernetes/kube-proxy.yaml \
      --v=2
    Restart=on-failure
    RestartSec=5
    LimitNOFILE=65536

    [Install]
    WantedBy=multi-user.target
    EOF

1.6 Sync the files to the cluster nodes

    cp kubernetes/server/bin/kube-proxy /usr/local/bin/
    cp kube-proxy*.pem /etc/kubernetes/ssl/
    cp kube-proxy.kubeconfig kube-proxy.yaml /etc/kubernetes/
    cp kube-proxy.service /usr/lib/systemd/system/

    for i in k8s-master02 k8s-master03 k8s-node01 k8s-node02; do scp kubernetes/server/bin/kube-proxy $i:/usr/local/bin/; done
    for i in k8s-master02 k8s-master03 k8s-node01 k8s-node02; do scp kube-proxy*.pem $i:/etc/kubernetes/ssl; done
    for i in k8s-master02 k8s-master03 k8s-node01 k8s-node02; do scp kube-proxy.kubeconfig kube-proxy.yaml $i:/etc/kubernetes/; done
    for i in k8s-master02 k8s-master03 k8s-node01 k8s-node02; do scp kube-proxy.service $i:/usr/lib/systemd/system/; done

Note: on every host, change the IP addresses in kube-proxy.yaml to that host's own IP.

1.7 Start the service

    mkdir -p /var/lib/kube-proxy

    systemctl daemon-reload
    systemctl enable --now kube-proxy
    systemctl status kube-proxy
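The note in step 1.6 asks for the IP addresses in kube-proxy.yaml to be changed on every host. A minimal sketch of doing that with sed instead of by hand is shown here; the function name `set_kube_proxy_ip` is hypothetical, and it assumes the distributed file still contains the template address 192.168.31.32 from step 1.4.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not from the original post): replace the template IP
# in a kube-proxy.yaml with the IP of the node the file is installed on.
set_kube_proxy_ip() {
    local file="$1" old_ip="$2" new_ip="$3"
    # Escape the dots so sed matches the literal address, not any character.
    local pattern="${old_ip//./\\.}"
    sed -i "s/${pattern}/${new_ip}/g" "$file"
}

# Usage on a node whose own address is 192.168.31.35:
#   set_kube_proxy_ip /etc/kubernetes/kube-proxy.yaml 192.168.31.32 192.168.31.35
#   systemctl restart kube-proxy
```

The replacement touches bindAddress, healthzBindAddress and metricsBindAddress in one pass and leaves clusterCIDR alone, because only the literal template address is matched.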


k8s 1.28 high-availability setup: Calico cluster 11

1 Deploy the network component: Calico

1.1 Download

Reference: https://docs.tigera.io/calico/latest/getting-started/kubernetes/self-managed-onprem/onpremises

    kubectl create -f https://raw.githubusercontent.com/projectcalico/calico/v3.26.4/manifests/tigera-operator.yaml
    curl https://raw.githubusercontent.com/projectcalico/calico/v3.26.4/manifests/custom-resources.yaml -O

1.2 Edit the file

Edit custom-resources.yaml so that it reads as follows:

    # This section includes base Calico installation configuration.
    # For more information, see: https://projectcalico.docs.tigera.io/master/reference/installation/api#operator.tigera.io/v1.Installation
    apiVersion: operator.tigera.io/v1
    kind: Installation
    metadata:
      name: default
    spec:
      # Configures Calico networking.
      calicoNetwork:
        # Note: The ipPools section cannot be modified post-install.
        ipPools:
        - blockSize: 26
          cidr: 10.244.0.0/16
          encapsulation: VXLANCrossSubnet
          natOutgoing: Enabled
          nodeSelector: all()

    ---

    # This section configures the Calico API server.
    # For more information, see: https://projectcalico.docs.tigera.io/master/reference/installation/api#operator.tigera.io/v1.APIServer
    apiVersion: operator.tigera.io/v1
    kind: APIServer
    metadata:
      name: default
    spec: {}

1.3 Apply the file

    mkdir -p /var/lib/kubelet/plugins_registry
    kubectl apply -f custom-resources.yaml

1.4 Verify the result

    [root@k8s-master01 k8s-work]# kubectl get pods -A -w
    NAMESPACE          NAME                                       READY   STATUS    RESTARTS   AGE
    calico-apiserver   calico-apiserver-86cf84fd44-lgq4f          1/1     Running   0          2m58s
    calico-apiserver   calico-apiserver-86cf84fd44-r9z9x          1/1     Running   0          2m57s
    calico-system      calico-kube-controllers-5464c5f856-9wc27   1/1     Running   0          25m
    calico-system      calico-node-2rc2k                          1/1     Running   0          25m
    calico-system      calico-node-jn49m                          1/1     Running   0          25m
    calico-system      calico-node-qh9cn                          1/1     Running   0          25m
    calico-system      calico-node-x8ws2                          1/1     Running   0          25m
    calico-system      calico-node-zdnfb                          1/1     Running   0          25m
    calico-system      calico-typha-56f47497f9-pf9qg              1/1     Running   0          25m
    calico-system      calico-typha-56f47497f9-wsbz9              1/1     Running   0          25m
    calico-system      calico-typha-56f47497f9-x48r5              1/1     Running   0          25m
    calico-system      csi-node-driver-7dz9r                      2/2     Running   0          25m
    calico-system      csi-node-driver-g9wl9                      2/2     Running   0          25m
    calico-system      csi-node-driver-hr7d8                      2/2     Running   0          25m
    calico-system      csi-node-driver-lrqb9                      2/2     Running   0          25m
    calico-system      csi-node-driver-z76d9                      2/2     Running   0          25m
    tigera-operator    tigera-operator-7f8cd97876-gn9fb           1/1     Running   0          52m

    [root@k8s-master01 k8s-work]# kubectl get nodes
    NAME           STATUS   ROLES    AGE   VERSION
    k8s-master01   Ready    <none>   25h   v1.28.4
    k8s-master02   Ready    <none>   25h   v1.28.4
    k8s-master03   Ready    <none>   24h   v1.28.4
    k8s-node01     Ready    <none>   24h   v1.28.4
    k8s-node02     Ready    <none>   24h   v1.28.4
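The pool `cidr` set in step 1.2 (10.244.0.0/16) is the same range that kube-proxy.yaml carries as `clusterCIDR`, and since the ipPools section cannot be changed after installation it is worth comparing the two before applying. A minimal sketch of such a check follows; the function names `yaml_value` and `check_pod_cidr` are hypothetical, and the lookup is a plain-text match rather than a real YAML parser.

```shell
#!/usr/bin/env bash
# Hypothetical helpers (not from the original post).

# Print the value that follows "key:" on the first line where it appears.
yaml_value() {
    local key="$1" file="$2"
    awk -v k="${key}:" '{ for (i = 1; i < NF; i++) if ($i == k) { print $(i + 1); exit } }' "$file"
}

# Compare the Calico pool CIDR with the clusterCIDR given to kube-proxy.
check_pod_cidr() {
    local calico_file="$1" proxy_file="$2"
    local calico_cidr proxy_cidr
    calico_cidr=$(yaml_value cidr "$calico_file")
    proxy_cidr=$(yaml_value clusterCIDR "$proxy_file")
    if [ -n "$calico_cidr" ] && [ "$calico_cidr" = "$proxy_cidr" ]; then
        echo "ok: pod CIDR ${calico_cidr}"
    else
        echo "mismatch: calico=${calico_cidr} kube-proxy=${proxy_cidr}" >&2
        return 1
    fi
}

# Usage, before running kubectl apply:
#   check_pod_cidr custom-resources.yaml /etc/kubernetes/kube-proxy.yaml
```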


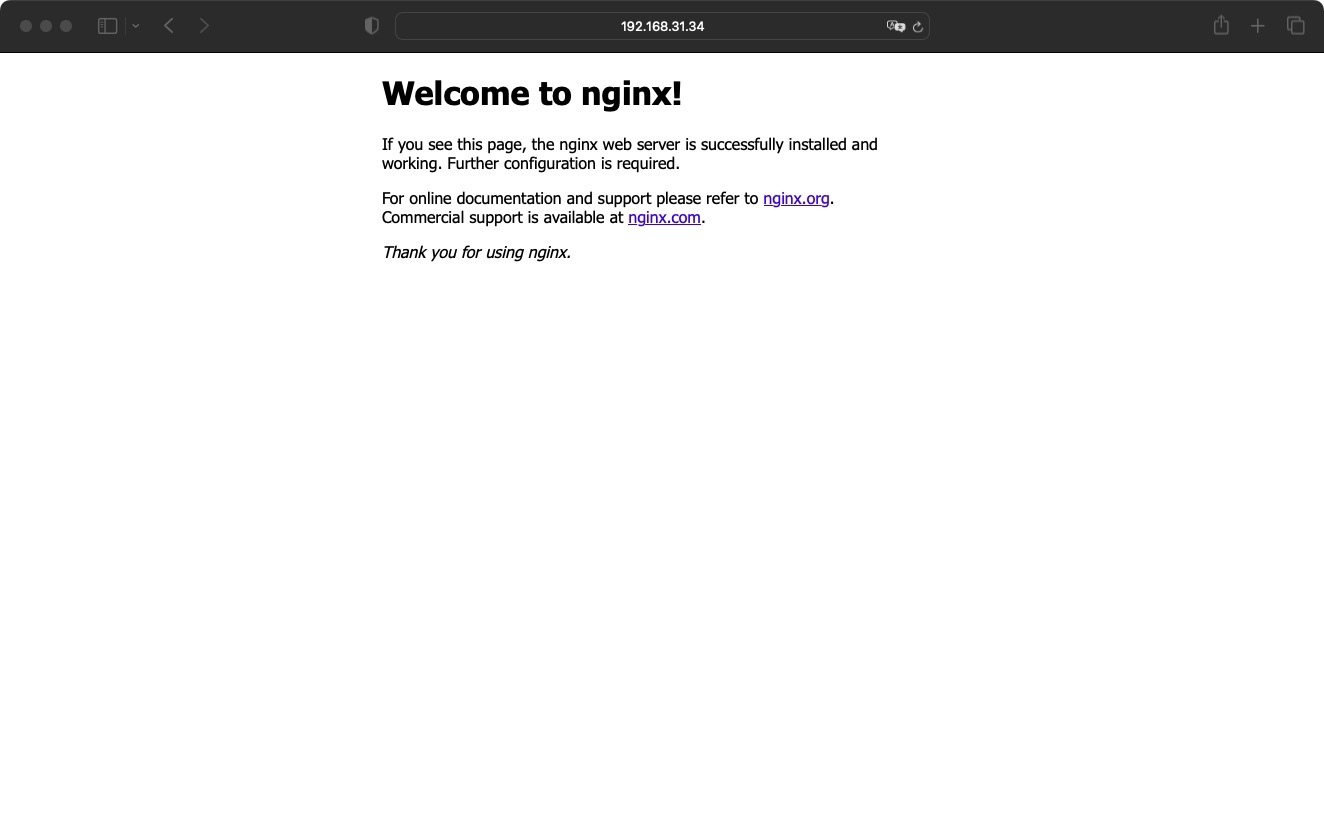

k8s 1.28 high-availability setup: CoreDNS cluster 12

1 Deploy CoreDNS

    cat > coredns.yaml << "EOF"
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: coredns
      namespace: kube-system
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      labels:
        kubernetes.io/bootstrapping: rbac-defaults
      name: system:coredns
    rules:
      - apiGroups:
        - ""
        resources:
        - endpoints
        - services
        - pods
        - namespaces
        verbs:
        - list
        - watch
      - apiGroups:
        - discovery.k8s.io
        resources:
        - endpointslices
        verbs:
        - list
        - watch
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      annotations:
        rbac.authorization.kubernetes.io/autoupdate: "true"
      labels:
        kubernetes.io/bootstrapping: rbac-defaults
      name: system:coredns
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: system:coredns
    subjects:
    - kind: ServiceAccount
      name: coredns
      namespace: kube-system
    ---
    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: coredns
      namespace: kube-system
    data:
      Corefile: |
        .:53 {
            errors
            health {
              lameduck 5s
            }
            ready
            kubernetes cluster.local in-addr.arpa ip6.arpa {
              fallthrough in-addr.arpa ip6.arpa
            }
            prometheus :9153
            forward . /etc/resolv.conf {
              max_concurrent 1000
            }
            cache 30
            loop
            reload
            loadbalance
        }
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: coredns
      namespace: kube-system
      labels:
        k8s-app: kube-dns
        kubernetes.io/name: "CoreDNS"
    spec:
      # replicas: not specified here:
      # 1. Default is 1.
      # 2. Will be tuned in real time if DNS horizontal auto-scaling is turned on.
      strategy:
        type: RollingUpdate
        rollingUpdate:
          maxUnavailable: 1
      selector:
        matchLabels:
          k8s-app: kube-dns
      template:
        metadata:
          labels:
            k8s-app: kube-dns
        spec:
          priorityClassName: system-cluster-critical
          serviceAccountName: coredns
          tolerations:
            - key: "CriticalAddonsOnly"
              operator: "Exists"
          nodeSelector:
            kubernetes.io/os: linux
          affinity:
            podAntiAffinity:
              preferredDuringSchedulingIgnoredDuringExecution:
              - weight: 100
                podAffinityTerm:
                  labelSelector:
                    matchExpressions:
                      - key: k8s-app
                        operator: In
                        values: ["kube-dns"]
                  topologyKey: kubernetes.io/hostname
          containers:
          - name: coredns
            image: coredns/coredns:1.11.1
            imagePullPolicy: IfNotPresent
            resources:
              limits:
                memory: 170Mi
              requests:
                cpu: 100m
                memory: 70Mi
            args: [ "-conf", "/etc/coredns/Corefile" ]
            volumeMounts:
            - name: config-volume
              mountPath: /etc/coredns
              readOnly: true
            ports:
            - containerPort: 53
              name: dns
              protocol: UDP
            - containerPort: 53
              name: dns-tcp
              protocol: TCP
            - containerPort: 9153
              name: metrics
              protocol: TCP
            securityContext:
              allowPrivilegeEscalation: false
              capabilities:
                add:
                - NET_BIND_SERVICE
                drop:
                - all
              readOnlyRootFilesystem: true
            livenessProbe:
              httpGet:
                path: /health
                port: 8080
                scheme: HTTP
              initialDelaySeconds: 60
              timeoutSeconds: 5
              successThreshold: 1
              failureThreshold: 5
            readinessProbe:
              httpGet:
                path: /ready
                port: 8181
                scheme: HTTP
          dnsPolicy: Default
          volumes:
            - name: config-volume
              configMap:
                name: coredns
                items:
                - key: Corefile
                  path: Corefile
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: kube-dns
      namespace: kube-system
      annotations:
        prometheus.io/port: "9153"
        prometheus.io/scrape: "true"
      labels:
        k8s-app: kube-dns
        kubernetes.io/cluster-service: "true"
        kubernetes.io/name: "CoreDNS"
    spec:
      selector:
        k8s-app: kube-dns
      clusterIP: 10.96.0.2
      ports:
      - name: dns
        port: 53
        protocol: UDP
      - name: dns-tcp
        port: 53
        protocol: TCP
      - name: metrics
        port: 9153
        protocol: TCP
    EOF

    kubectl apply -f coredns.yaml

    # kubectl get pods -A
    NAMESPACE     NAME                                       READY   STATUS    RESTARTS   AGE
    kube-system   calico-kube-controllers-7cc8dd57d9-tf2m5   1/1     Running   0          4m7s
    kube-system   calico-node-llw5w                          1/1     Running   0          4m7s
    kube-system   calico-node-mhh6g                          1/1     Running   0          4m7s
    kube-system   calico-node-twj99                          1/1     Running   0          4m7s
    kube-system   calico-node-zh6xl                          1/1     Running   0          4m7s
    kube-system   coredns-675db8b7cc-ncnf6                   1/1     Running   0          26s

2.5.11 Deploy an application to verify

    cat > nginx.yaml << "EOF"
    ---
    apiVersion: v1
    kind: ReplicationController
    metadata:
      name: nginx-web
    spec:
      replicas: 2
      selector:
        name: nginx
      template:
        metadata:
          labels:
            name: nginx
        spec:
          containers:
            - name: nginx
              image: nginx:1.19.6
              ports:
                - containerPort: 80
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: nginx-service-nodeport
    spec:
      ports:
        - port: 80
          targetPort: 80
          nodePort: 30001
          protocol: TCP
      type: NodePort
      selector:
        name: nginx
    EOF

    kubectl apply -f nginx.yaml

    [root@k8s-master01 k8s-work]# kubectl get pods -A -w -o wide
    NAMESPACE          NAME                                       READY   STATUS    RESTARTS   AGE     IP               NODE           NOMINATED NODE   READINESS GATES
    calico-apiserver   calico-apiserver-86cf84fd44-lgq4f          1/1     Running   0          7m31s   10.244.58.193    k8s-node02     <none>           <none>
    calico-apiserver   calico-apiserver-86cf84fd44-r9z9x          1/1     Running   0          7m30s   10.244.195.1     k8s-master03   <none>           <none>
    calico-system      calico-kube-controllers-5464c5f856-9wc27   1/1     Running   0          30m     10.88.0.2        k8s-master03   <none>           <none>
    calico-system      calico-node-2rc2k                          1/1     Running   0          30m     192.168.31.34    k8s-master01   <none>           <none>
    calico-system      calico-node-jn49m                          1/1     Running   0          30m     192.168.31.38    k8s-node02     <none>           <none>
    calico-system      calico-node-qh9cn                          1/1     Running   0          30m     192.168.31.37    k8s-node01     <none>           <none>
    calico-system      calico-node-x8ws2                          1/1     Running   0          30m     192.168.31.36    k8s-master03   <none>           <none>
    calico-system      calico-node-zdnfb                          1/1     Running   0          30m     192.168.31.35    k8s-master02   <none>           <none>
    calico-system      calico-typha-56f47497f9-pf9qg              1/1     Running   0          30m     192.168.31.34    k8s-master01   <none>           <none>
    calico-system      calico-typha-56f47497f9-wsbz9              1/1     Running   0          30m     192.168.31.35    k8s-master02   <none>           <none>
    calico-system      calico-typha-56f47497f9-x48r5              1/1     Running   0          30m     192.168.31.38    k8s-node02     <none>           <none>
    calico-system      csi-node-driver-7dz9r                      2/2     Running   0          30m     10.244.58.194    k8s-node02     <none>           <none>
    calico-system      csi-node-driver-g9wl9                      2/2     Running   0          30m     10.244.195.2     k8s-master03   <none>           <none>
    calico-system      csi-node-driver-hr7d8                      2/2     Running   0          30m     10.244.32.129    k8s-master01   <none>           <none>
    calico-system      csi-node-driver-lrqb9                      2/2     Running   0          30m     10.244.122.129   k8s-master02   <none>           <none>
    calico-system      csi-node-driver-z76d9                      2/2     Running   0          30m     10.244.85.193    k8s-node01     <none>           <none>
    default            nginx-web-2qzth                            1/1     Running   0          46s     10.244.32.131    k8s-master01   <none>           <none>
    default            nginx-web-v6srq                            1/1     Running   0          46s     10.244.85.194    k8s-node01     <none>           <none>
    kube-system        coredns-7dbfc4968f-lsrfl                   1/1     Running   0          2m9s    10.244.32.130    k8s-master01   <none>           <none>
    kube-system        coredns-7dbfc4968f-xsx78                   1/1     Running   0          3m36s   10.244.122.130   k8s-master02   <none>           <none>
    tigera-operator    tigera-operator-7f8cd97876-gn9fb           1/1     Running   0          56m     192.168.31.37    k8s-node01     <none>           <none>

    [root@k8s-master01 k8s-work]# kubectl get all
    NAME                  READY   STATUS    RESTARTS   AGE
    pod/nginx-web-2qzth   1/1     Running   0          68s
    pod/nginx-web-v6srq   1/1     Running   0          68s

    NAME                              DESIRED   CURRENT   READY   AGE
    replicationcontroller/nginx-web   2         2         2       68s

    NAME                             TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)        AGE
    service/kubernetes               ClusterIP   10.96.0.1      <none>        443/TCP        3d3h
    service/nginx-service-nodeport   NodePort    10.96.65.129   <none>        80:30001/TCP   68s

Service verification

    [root@k8s-master01 k8s-work]# kubectl get svc
    NAME                     TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)        AGE
    kubernetes               ClusterIP   10.96.0.1      <none>        443/TCP        3d3h
    nginx-service-nodeport   NodePort    10.96.65.129   <none>        80:30001/TCP   2m5s

    [root@k8s-master01 k8s-work]# curl 10.96.65.129
    <!DOCTYPE html>
    <html>
    <head>
    <title>Welcome to nginx!</title>
    <style>
        body {
            width: 35em;
            margin: 0 auto;
            font-family: Tahoma, Verdana, Arial, sans-serif;
        }
    </style>
    </head>
    <body>
    <h1>Welcome to nginx!</h1>
    <p>If you see this page, the nginx web server is successfully installed and
    working. Further configuration is required.</p>

    <p>For online documentation and support please refer to
    <a href="http://nginx.org/">nginx.org</a>.<br/>
    Commercial support is available at
    <a href="http://nginx.com/">nginx.com</a>.</p>

    <p><em>Thank you for using nginx.</em></p>
    </body>
    </html>

Browser verification
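The NodePort Service exposes nginx on port 30001 of every node, so the same check a browser performs can be sketched from a shell. The helper names `nodeport_urls` and `check_nodeport` are hypothetical; the node IPs in the usage line are the ones shown for the calico-node pods above, and curl is assumed to be installed on the machine running the check.

```shell
#!/usr/bin/env bash
# Hypothetical helpers (not from the original post) for checking a NodePort
# Service from a shell instead of a browser.

# Print one URL per node IP for the given NodePort.
nodeport_urls() {
    local port="$1"; shift
    local ip
    for ip in "$@"; do
        echo "http://${ip}:${port}/"
    done
}

# Request each URL and print its HTTP status code (000 means no response).
check_nodeport() {
    local url
    nodeport_urls "$@" | while read -r url; do
        printf '%s %s\n' "$url" "$(curl -s -o /dev/null -w '%{http_code}' --max-time 5 "$url")"
    done
}

# Usage:
#   check_nodeport 30001 192.168.31.34 192.168.31.35 192.168.31.36 192.168.31.37 192.168.31.38
```

Any of the printed URLs can also be opened directly in a browser; a working Service answers on every node, not only on the nodes that run an nginx pod.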

k8s 1.28 High-Availability Setup: CoreDNS Cluster (12)

1 Deploy CoreDNS

cat > coredns.yaml << "EOF"
apiVersion: v1
kind: ServiceAccount
metadata:
  name: coredns
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:coredns
rules:
  - apiGroups:
    - ""
    resources:
    - endpoints
    - services
    - pods
    - namespaces
    verbs:
    - list
    - watch
  - apiGroups:
    - discovery.k8s.io
    resources:
    - endpointslices
    verbs:
    - list
    - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:coredns
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:coredns
subjects:
- kind: ServiceAccount
  name: coredns
  namespace: kube-system
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: coredns
  namespace: kube-system
data:
  Corefile: |
    .:53 {
        errors
        health {
          lameduck 5s
        }
        ready
        kubernetes cluster.local in-addr.arpa ip6.arpa {
          fallthrough in-addr.arpa ip6.arpa
        }
        prometheus :9153
        forward . /etc/resolv.conf {
          max_concurrent 1000
        }
        cache 30
        loop
        reload
        loadbalance
    }
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: coredns
  namespace: kube-system
  labels:
    k8s-app: kube-dns
    kubernetes.io/name: "CoreDNS"
spec:
  # replicas: not specified here:
  # 1. Default is 1.
  # 2. Will be tuned in real time if DNS horizontal auto-scaling is turned on.
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
  selector:
    matchLabels:
      k8s-app: kube-dns
  template:
    metadata:
      labels:
        k8s-app: kube-dns
    spec:
      priorityClassName: system-cluster-critical
      serviceAccountName: coredns
      tolerations:
        - key: "CriticalAddonsOnly"
          operator: "Exists"
      nodeSelector:
        kubernetes.io/os: linux
      affinity:
        podAntiAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
          - weight: 100
            podAffinityTerm:
              labelSelector:
                matchExpressions:
                - key: k8s-app
                  operator: In
                  values: ["kube-dns"]
              topologyKey: kubernetes.io/hostname
      containers:
      - name: coredns
        image: coredns/coredns:1.11.1
        imagePullPolicy: IfNotPresent
        resources:
          limits:
            memory: 170Mi
          requests:
            cpu: 100m
            memory: 70Mi
        args: [ "-conf", "/etc/coredns/Corefile" ]
        volumeMounts:
        - name: config-volume
          mountPath: /etc/coredns
          readOnly: true
        ports:
        - containerPort: 53
          name: dns
          protocol: UDP
        - containerPort: 53
          name: dns-tcp
          protocol: TCP
        - containerPort: 9153
          name: metrics
          protocol: TCP
        securityContext:
          allowPrivilegeEscalation: false
          capabilities:
            add:
            - NET_BIND_SERVICE
            drop:
            - all
          readOnlyRootFilesystem: true
        livenessProbe:
          httpGet:
            path: /health
            port: 8080
            scheme: HTTP
          initialDelaySeconds: 60
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 5
        readinessProbe:
          httpGet:
            path: /ready
            port: 8181
            scheme: HTTP
      dnsPolicy: Default
      volumes:
        - name: config-volume
          configMap:
            name: coredns
            items:
            - key: Corefile
              path: Corefile
---
apiVersion: v1
kind: Service
metadata:
  name: kube-dns
  namespace: kube-system
  annotations:
    prometheus.io/port: "9153"
    prometheus.io/scrape: "true"
  labels:
    k8s-app: kube-dns
    kubernetes.io/cluster-service: "true"
    kubernetes.io/name: "CoreDNS"
spec:
  selector:
    k8s-app: kube-dns
  clusterIP: 10.96.0.2
  ports:
  - name: dns
    port: 53
    protocol: UDP
  - name: dns-tcp
    port: 53
    protocol: TCP
  - name: metrics
    port: 9153
    protocol: TCP
EOF

kubectl apply -f coredns.yaml

# kubectl get pods -A
NAMESPACE     NAME                                       READY   STATUS    RESTARTS   AGE
kube-system   calico-kube-controllers-7cc8dd57d9-tf2m5   1/1     Running   0          4m7s
kube-system   calico-node-llw5w                          1/1     Running   0          4m7s
kube-system   calico-node-mhh6g                          1/1     Running   0          4m7s
kube-system   calico-node-twj99                          1/1     Running   0          4m7s
kube-system   calico-node-zh6xl                          1/1     Running   0          4m7s
kube-system   coredns-675db8b7cc-ncnf6                   1/1     Running   0          26s

2.5.11 Deploy an application to verify

cat > nginx.yaml << "EOF"
---
apiVersion: v1
kind: ReplicationController
metadata:
  name: nginx-web
spec:
  replicas: 2
  selector:
    name: nginx
  template:
    metadata:
      labels:
        name: nginx
    spec:
      containers:
        - name: nginx
          image: nginx:1.19.6
          ports:
            - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-service-nodeport
spec:
  ports:
    - port: 80
      targetPort: 80
      nodePort: 30001
      protocol: TCP
  type: NodePort
  selector:
    name: nginx
EOF

kubectl apply -f nginx.yaml

[root@k8s-master01 k8s-work]# kubectl get pods -A -w -o wide
NAMESPACE         NAME                                       READY   STATUS    RESTARTS   AGE     IP               NODE           NOMINATED NODE   READINESS GATES
calico-apiserver  calico-apiserver-86cf84fd44-lgq4f          1/1     Running   0          7m31s   10.244.58.193    k8s-node02     <none>           <none>
calico-apiserver  calico-apiserver-86cf84fd44-r9z9x          1/1     Running   0          7m30s   10.244.195.1     k8s-master03   <none>           <none>
calico-system     calico-kube-controllers-5464c5f856-9wc27   1/1     Running   0          30m     10.88.0.2        k8s-master03   <none>           <none>
calico-system     calico-node-2rc2k                          1/1     Running   0          30m     192.168.31.34    k8s-master01   <none>           <none>
calico-system     calico-node-jn49m                          1/1     Running   0          30m     192.168.31.38    k8s-node02     <none>           <none>
calico-system     calico-node-qh9cn                          1/1     Running   0          30m     192.168.31.37    k8s-node01     <none>           <none>
calico-system     calico-node-x8ws2                          1/1     Running   0          30m     192.168.31.36    k8s-master03   <none>           <none>
calico-system     calico-node-zdnfb                          1/1     Running   0          30m     192.168.31.35    k8s-master02   <none>           <none>
calico-system     calico-typha-56f47497f9-pf9qg              1/1     Running   0          30m     192.168.31.34    k8s-master01   <none>           <none>
calico-system     calico-typha-56f47497f9-wsbz9              1/1     Running   0          30m     192.168.31.35    k8s-master02   <none>           <none>
calico-system     calico-typha-56f47497f9-x48r5              1/1     Running   0          30m     192.168.31.38    k8s-node02     <none>           <none>
calico-system     csi-node-driver-7dz9r                      2/2     Running   0          30m     10.244.58.194    k8s-node02     <none>           <none>
calico-system     csi-node-driver-g9wl9                      2/2     Running   0          30m     10.244.195.2     k8s-master03   <none>           <none>
calico-system     csi-node-driver-hr7d8                      2/2     Running   0          30m     10.244.32.129    k8s-master01   <none>           <none>
calico-system     csi-node-driver-lrqb9                      2/2     Running   0          30m     10.244.122.129   k8s-master02   <none>           <none>
calico-system     csi-node-driver-z76d9                      2/2     Running   0          30m     10.244.85.193    k8s-node01     <none>           <none>
default           nginx-web-2qzth                            1/1     Running   0          46s     10.244.32.131    k8s-master01   <none>           <none>
default           nginx-web-v6srq                            1/1     Running   0          46s     10.244.85.194    k8s-node01     <none>           <none>
kube-system       coredns-7dbfc4968f-lsrfl                   1/1     Running   0          2m9s    10.244.32.130    k8s-master01   <none>           <none>
kube-system       coredns-7dbfc4968f-xsx78                   1/1     Running   0          3m36s   10.244.122.130   k8s-master02   <none>           <none>
tigera-operator   tigera-operator-7f8cd97876-gn9fb           1/1     Running   0          56m     192.168.31.37    k8s-node01     <none>           <none>

[root@k8s-master01 k8s-work]# kubectl get all
NAME                  READY   STATUS    RESTARTS   AGE
pod/nginx-web-2qzth   1/1     Running   0          68s
pod/nginx-web-v6srq   1/1     Running   0          68s

NAME                              DESIRED   CURRENT   READY   AGE
replicationcontroller/nginx-web   2         2         2       68s

NAME                             TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)        AGE
service/kubernetes               ClusterIP   10.96.0.1      <none>        443/TCP        3d3h
service/nginx-service-nodeport   NodePort    10.96.65.129   <none>        80:30001/TCP   68s

Verify the svc

[root@k8s-master01 k8s-work]# kubectl get svc
NAME                     TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)        AGE
kubernetes               ClusterIP   10.96.0.1      <none>        443/TCP        3d3h
nginx-service-nodeport   NodePort    10.96.65.129   <none>        80:30001/TCP   2m5s

[root@k8s-master01 k8s-work]# curl 10.96.65.129
<!DOCTYPE html>
<html>
<head>
<title>Welcome to nginx!</title>
<style>
    body {
        width: 35em;
        margin: 0 auto;
        font-family: Tahoma, Verdana, Arial, sans-serif;
    }
</style>
</head>
<body>
<h1>Welcome to nginx!</h1>
<p>If you see this page, the nginx web server is successfully installed and
working. Further configuration is required.</p>

<p>For online documentation and support please refer to
<a href="http://nginx.org/">nginx.org</a>.<br/>
Commercial support is available at
<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>
</body>
</html>

Browser verification
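Because nginx-service-nodeport is a NodePort Service on port 30001, the same welcome page should be reachable on that port at any node's address. The sketch below only prints the URLs to open in a browser; the node IPs are the host addresses shown in the pod listing above, while `NODE_IPS`, `NODE_PORT`, and `nodeport_urls` are illustrative names rather than part of the original procedure.

```shell
#!/bin/bash
# Sketch: list the NodePort URLs to try from a browser or with curl.
# NODE_IPS and NODE_PORT mirror values that appear in the output above;
# nodeport_urls is an illustrative helper name.
NODE_IPS="192.168.31.34 192.168.31.35 192.168.31.36 192.168.31.37 192.168.31.38"
NODE_PORT=30001

nodeport_urls() {
  local ip
  for ip in $NODE_IPS; do
    echo "http://${ip}:${NODE_PORT}"
  done
}

nodeport_urls
# On a machine that can reach the nodes, each URL could be probed with:
#   curl -s -o /dev/null -w '%{http_code}\n' http://192.168.31.34:30001
```

If one node answers and another does not, the likely suspects are kube-proxy or a host firewall on the silent node rather than the Service itself.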

k8s 1.28 High-Availability Setup: kube-scheduler Cluster (07)

2.5.8.1 Create the kube-scheduler certificate signing request file

cat > kube-scheduler-csr.json << "EOF"
{
    "CN": "system:kube-scheduler",
    "hosts": [
      "127.0.0.1",
      "192.168.31.34",
      "192.168.31.35",
      "192.168.31.36"
    ],
    "key": {
        "algo": "rsa",
        "size": 2048
    },
    "names": [
      {
        "C": "CN",
        "ST": "Beijing",
        "L": "Beijing",
        "O": "system:kube-scheduler",
        "OU": "system"
      }
    ]
}
EOF

2.5.8.2 Generate the kube-scheduler certificate

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-scheduler-csr.json | cfssljson -bare kube-scheduler

# ls
kube-scheduler.csr  kube-scheduler-csr.json  kube-scheduler-key.pem  kube-scheduler.pem

2.5.8.3 Create the kubeconfig for kube-scheduler

kubectl config set-cluster kubernetes --certificate-authority=ca.pem --embed-certs=true --server=https://192.168.31.100:6443 --kubeconfig=kube-scheduler.kubeconfig

kubectl config set-credentials system:kube-scheduler --client-certificate=kube-scheduler.pem --client-key=kube-scheduler-key.pem --embed-certs=true --kubeconfig=kube-scheduler.kubeconfig

kubectl config set-context system:kube-scheduler --cluster=kubernetes --user=system:kube-scheduler --kubeconfig=kube-scheduler.kubeconfig

kubectl config use-context system:kube-scheduler --kubeconfig=kube-scheduler.kubeconfig

2.5.8.4 Create the service configuration file

cat > kube-scheduler.conf << "EOF"
KUBE_SCHEDULER_OPTS="--bind-address=127.0.0.1 \
--kubeconfig=/etc/kubernetes/kube-scheduler.kubeconfig \
--leader-elect=true \
--v=2"
EOF

2.5.8.5 Create the systemd unit file

cat > kube-scheduler.service << "EOF"
[Unit]
Description=Kubernetes Scheduler
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=-/etc/kubernetes/kube-scheduler.conf
ExecStart=/usr/local/bin/kube-scheduler $KUBE_SCHEDULER_OPTS
Restart=on-failure
RestartSec=5

[Install]
WantedBy=multi-user.target
EOF

2.5.8.6 Sync the files to the cluster master nodes

cp kube-scheduler*.pem /etc/kubernetes/ssl/
cp kube-scheduler.kubeconfig /etc/kubernetes/
cp kube-scheduler.conf /etc/kubernetes/
cp kube-scheduler.service /usr/lib/systemd/system/

scp kube-scheduler*.pem k8s-master02:/etc/kubernetes/ssl/
scp kube-scheduler*.pem k8s-master03:/etc/kubernetes/ssl/
scp kube-scheduler.kubeconfig kube-scheduler.conf k8s-master02:/etc/kubernetes/
scp kube-scheduler.kubeconfig kube-scheduler.conf k8s-master03:/etc/kubernetes/
scp kube-scheduler.service k8s-master02:/usr/lib/systemd/system/
scp kube-scheduler.service k8s-master03:/usr/lib/systemd/system/

2.5.8.7 Start the service

# Run on every master node that received the files.
systemctl daemon-reload
systemctl enable --now kube-scheduler
systemctl status kube-scheduler
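The six scp commands in the sync step follow one pattern per master, so they could be generated by a loop. The following is a dry-run sketch only: it prints the commands instead of running them, and `MASTERS` and `print_sync_cmds` are illustrative names that do not appear in the original procedure.

```shell
#!/bin/bash
# Sketch: print (not run) the scp commands for each additional master node.
# MASTERS and print_sync_cmds are illustrative names, not from the original.
MASTERS="k8s-master02 k8s-master03"

print_sync_cmds() {
  local host
  for host in $MASTERS; do
    echo "scp kube-scheduler*.pem ${host}:/etc/kubernetes/ssl/"
    echo "scp kube-scheduler.kubeconfig kube-scheduler.conf ${host}:/etc/kubernetes/"
    echo "scp kube-scheduler.service ${host}:/usr/lib/systemd/system/"
  done
}

print_sync_cmds
```

Reviewing the printed commands first and then piping them to `bash` from the directory holding the generated files would perform the copy, assuming passwordless SSH to the listed hosts is already set up.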
