1 Deploy CoreDNS

cat > coredns.yaml << "EOF"
apiVersion: v1
kind: ServiceAccount
metadata:
  name: coredns
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:coredns
rules:
  - apiGroups:
      - ""
    resources:
      - endpoints
      - services
      - pods
      - namespaces
    verbs:
      - list
      - watch
  - apiGroups:
      - discovery.k8s.io
    resources:
      - endpointslices
    verbs:
      - list
      - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:coredns
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:coredns
subjects:
  - kind: ServiceAccount
    name: coredns
    namespace: kube-system
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: coredns
  namespace: kube-system
data:
  Corefile: |
    .:53 {
        errors
        health {
          lameduck 5s
        }
        ready
        kubernetes cluster.local in-addr.arpa ip6.arpa {
          fallthrough in-addr.arpa ip6.arpa
        }
        prometheus :9153
        forward . /etc/resolv.conf {
          max_concurrent 1000
        }
        cache 30
        loop
        reload
        loadbalance
    }
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: coredns
  namespace: kube-system
  labels:
    k8s-app: kube-dns
    kubernetes.io/name: "CoreDNS"
spec:
  # replicas: not specified here:
  # 1. Default is 1.
  # 2. Will be tuned in real time if DNS horizontal auto-scaling is turned on.
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
  selector:
    matchLabels:
      k8s-app: kube-dns
  template:
    metadata:
      labels:
        k8s-app: kube-dns
    spec:
      priorityClassName: system-cluster-critical
      serviceAccountName: coredns
      tolerations:
        - key: "CriticalAddonsOnly"
          operator: "Exists"
      nodeSelector:
        kubernetes.io/os: linux
      affinity:
        podAntiAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
            - weight: 100
              podAffinityTerm:
                labelSelector:
                  matchExpressions:
                    - key: k8s-app
                      operator: In
                      values: ["kube-dns"]
                topologyKey: kubernetes.io/hostname
      containers:
        - name: coredns
          image: coredns/coredns:1.11.1
          imagePullPolicy: IfNotPresent
          resources:
            limits:
              memory: 170Mi
            requests:
              cpu: 100m
              memory: 70Mi
          args: [ "-conf", "/etc/coredns/Corefile" ]
          volumeMounts:
            - name: config-volume
              mountPath: /etc/coredns
              readOnly: true
          ports:
            - containerPort: 53
              name: dns
              protocol: UDP
            - containerPort: 53
              name: dns-tcp
              protocol: TCP
            - containerPort: 9153
              name: metrics
              protocol: TCP
          securityContext:
            allowPrivilegeEscalation: false
            capabilities:
              add:
                - NET_BIND_SERVICE
              drop:
                - all
            readOnlyRootFilesystem: true
          livenessProbe:
            httpGet:
              path: /health
              port: 8080
              scheme: HTTP
            initialDelaySeconds: 60
            timeoutSeconds: 5
            successThreshold: 1
            failureThreshold: 5
          readinessProbe:
            httpGet:
              path: /ready
              port: 8181
              scheme: HTTP
      dnsPolicy: Default
      volumes:
        - name: config-volume
          configMap:
            name: coredns
            items:
              - key: Corefile
                path: Corefile
---
apiVersion: v1
kind: Service
metadata:
  name: kube-dns
  namespace: kube-system
  annotations:
    prometheus.io/port: "9153"
    prometheus.io/scrape: "true"
  labels:
    k8s-app: kube-dns
    kubernetes.io/cluster-service: "true"
    kubernetes.io/name: "CoreDNS"
spec:
  selector:
    k8s-app: kube-dns
  clusterIP: 10.96.0.2
  ports:
    - name: dns
      port: 53
      protocol: UDP
    - name: dns-tcp
      port: 53
      protocol: TCP
    - name: metrics
      port: 9153
      protocol: TCP
EOF

kubectl apply -f coredns.yaml

# kubectl get pods -A
NAMESPACE     NAME                                       READY   STATUS    RESTARTS   AGE
kube-system   calico-kube-controllers-7cc8dd57d9-tf2m5   1/1     Running   0          4m7s
kube-system   calico-node-llw5w                          1/1     Running   0          4m7s
kube-system   calico-node-mhh6g                          1/1     Running   0          4m7s
kube-system   calico-node-twj99                          1/1     Running   0          4m7s
kube-system   calico-node-zh6xl                          1/1     Running   0          4m7s
kube-system   coredns-675db8b7cc-ncnf6                   1/1     Running   0          26s
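
A Running coredns Pod does not by itself prove that names resolve, so a quick lookup from inside the cluster can be a useful extra check. The following is a minimal sketch and not part of the original walkthrough: the helper names `svc_fqdn` and `check_dns`, the Pod name `dns-test`, and the `busybox:1.28` image are all assumptions, and it presumes the kubelet's cluster DNS setting points at the `kube-dns` Service address (10.96.0.2). The zone `cluster.local` is the one configured in the Corefile.

```shell
#!/bin/sh
# Build the in-cluster DNS name of a Service: <service>.<namespace>.svc.<zone>.
# The zone defaults to cluster.local, the zone configured in the Corefile.
svc_fqdn() {
    printf '%s.%s.svc.%s\n' "$1" "$2" "${3:-cluster.local}"
}

# Resolve a Service name from a throwaway Pod (hypothetical name: dns-test).
# Assumes the nodes can pull the busybox:1.28 image.
check_dns() {
    kubectl run dns-test --image=busybox:1.28 --rm -i --restart=Never -- \
        nslookup "$(svc_fqdn "$1" "$2")"
}

# Usage (needs a working cluster):
#   check_dns kubernetes default
#   check_dns kube-dns kube-system
```

If the lookup of `kubernetes.default.svc.cluster.local` returns 10.96.0.1, CoreDNS is serving the cluster zone.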

2.5.11 Deploy an Application to Verify the Cluster

cat > nginx.yaml << "EOF"
---
apiVersion: v1
kind: ReplicationController
metadata:
  name: nginx-web
spec:
  replicas: 2
  selector:
    name: nginx
  template:
    metadata:
      labels:
        name: nginx
    spec:
      containers:
        - name: nginx
          image: nginx:1.19.6
          ports:
            - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-service-nodeport
spec:
  ports:
    - port: 80
      targetPort: 80
      nodePort: 30001
      protocol: TCP
  type: NodePort
  selector:
    name: nginx
EOF

kubectl apply -f nginx.yaml
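
Instead of watching the Pod list by hand, a script can block until the nginx Pods are Ready. This is a small sketch under assumptions: the function name `wait_for_nginx` and the 120-second default timeout are made up for illustration, while the label `name=nginx` comes from the manifest.

```shell
#!/bin/sh
# Block until every Pod labelled name=nginx reports the Ready condition,
# or fail once the timeout (default 120s, an arbitrary choice) expires.
wait_for_nginx() {
    kubectl wait --for=condition=Ready pod -l name=nginx --timeout="${1:-120s}"
}

# Usage (needs a working cluster):
#   wait_for_nginx        # wait up to 120s
#   wait_for_nginx 30s    # wait up to 30s
```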

[root@k8s-master01 k8s-work]# kubectl get pods -A -w -o wide
NAMESPACE          NAME                                       READY   STATUS    RESTARTS   AGE     IP               NODE           NOMINATED NODE   READINESS GATES
calico-apiserver   calico-apiserver-86cf84fd44-lgq4f          1/1     Running   0          7m31s   10.244.58.193    k8s-node02     <none>           <none>
calico-apiserver   calico-apiserver-86cf84fd44-r9z9x          1/1     Running   0          7m30s   10.244.195.1     k8s-master03   <none>           <none>
calico-system      calico-kube-controllers-5464c5f856-9wc27   1/1     Running   0          30m     10.88.0.2        k8s-master03   <none>           <none>
calico-system      calico-node-2rc2k                          1/1     Running   0          30m     192.168.31.34    k8s-master01   <none>           <none>
calico-system      calico-node-jn49m                          1/1     Running   0          30m     192.168.31.38    k8s-node02     <none>           <none>
calico-system      calico-node-qh9cn                          1/1     Running   0          30m     192.168.31.37    k8s-node01     <none>           <none>
calico-system      calico-node-x8ws2                          1/1     Running   0          30m     192.168.31.36    k8s-master03   <none>           <none>
calico-system      calico-node-zdnfb                          1/1     Running   0          30m     192.168.31.35    k8s-master02   <none>           <none>
calico-system      calico-typha-56f47497f9-pf9qg              1/1     Running   0          30m     192.168.31.34    k8s-master01   <none>           <none>
calico-system      calico-typha-56f47497f9-wsbz9              1/1     Running   0          30m     192.168.31.35    k8s-master02   <none>           <none>
calico-system      calico-typha-56f47497f9-x48r5              1/1     Running   0          30m     192.168.31.38    k8s-node02     <none>           <none>
calico-system      csi-node-driver-7dz9r                      2/2     Running   0          30m     10.244.58.194    k8s-node02     <none>           <none>
calico-system      csi-node-driver-g9wl9                      2/2     Running   0          30m     10.244.195.2     k8s-master03   <none>           <none>
calico-system      csi-node-driver-hr7d8                      2/2     Running   0          30m     10.244.32.129    k8s-master01   <none>           <none>
calico-system      csi-node-driver-lrqb9                      2/2     Running   0          30m     10.244.122.129   k8s-master02   <none>           <none>
calico-system      csi-node-driver-z76d9                      2/2     Running   0          30m     10.244.85.193    k8s-node01     <none>           <none>
default            nginx-web-2qzth                            1/1     Running   0          46s     10.244.32.131    k8s-master01   <none>           <none>
default            nginx-web-v6srq                            1/1     Running   0          46s     10.244.85.194    k8s-node01     <none>           <none>
kube-system        coredns-7dbfc4968f-lsrfl                   1/1     Running   0          2m9s    10.244.32.130    k8s-master01   <none>           <none>
kube-system        coredns-7dbfc4968f-xsx78                   1/1     Running   0          3m36s   10.244.122.130   k8s-master02   <none>           <none>
tigera-operator    tigera-operator-7f8cd97876-gn9fb           1/1     Running   0          56m     192.168.31.37    k8s-node01     <none>           <none>
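
Scanning a listing this long by eye is error-prone, so the check can be narrowed to the Pods that are not healthy. The filter below is a sketch, not the author's method: the function name `not_running` is hypothetical, and it relies on STATUS being the fourth column of `kubectl get pods -A` output, as in the listing above.

```shell
#!/bin/sh
# Read `kubectl get pods -A` output on stdin and print every Pod whose STATUS
# (4th column) is neither Running nor Completed, as "<namespace>/<name> <status>".
# Exit status is 1 if at least one such Pod was found, 0 otherwise.
not_running() {
    awk 'NR > 1 && $4 != "Running" && $4 != "Completed" {
             print $1 "/" $2 " " $4
             bad = 1
         }
         END { exit bad + 0 }'
}

# Usage (needs a working cluster):
#   kubectl get pods -A | not_running && echo "all pods healthy"
```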

[root@k8s-master01 k8s-work]# kubectl get all
NAME                  READY   STATUS    RESTARTS   AGE
pod/nginx-web-2qzth   1/1     Running   0          68s
pod/nginx-web-v6srq   1/1     Running   0          68s

NAME                              DESIRED   CURRENT   READY   AGE
replicationcontroller/nginx-web   2         2         2       68s

NAME                             TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)        AGE
service/kubernetes               ClusterIP   10.96.0.1      <none>        443/TCP        3d3h
service/nginx-service-nodeport   NodePort    10.96.65.129   <none>        80:30001/TCP   68s

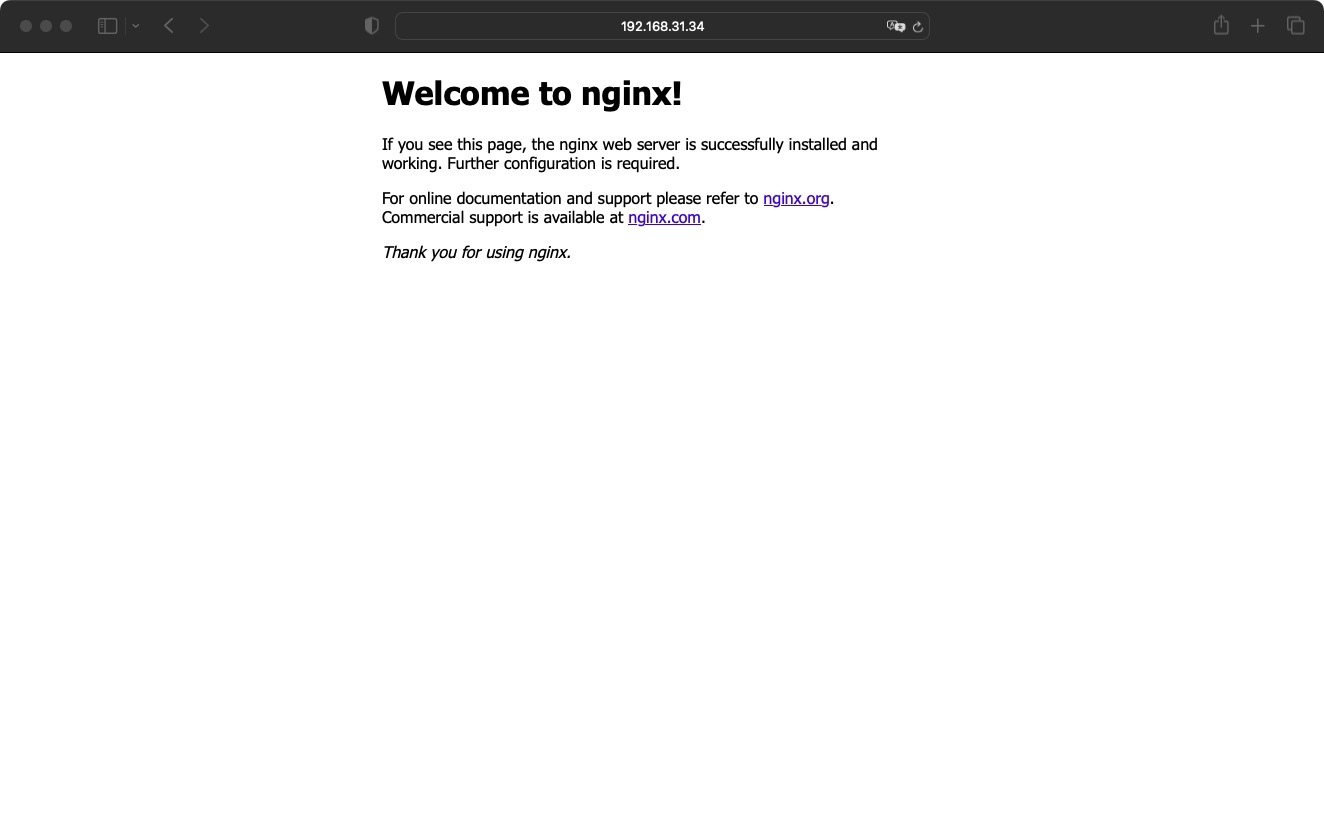

Service verification

[root@k8s-master01 k8s-work]# kubectl get svc
NAME                     TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)        AGE
kubernetes               ClusterIP   10.96.0.1      <none>        443/TCP        3d3h
nginx-service-nodeport   NodePort    10.96.65.129   <none>        80:30001/TCP   2m5s

[root@k8s-master01 k8s-work]# curl 10.96.65.129
<!DOCTYPE html>
<html>
<head>
<title>Welcome to nginx!</title>
<style>
    body {
        width: 35em;
        margin: 0 auto;
        font-family: Tahoma, Verdana, Arial, sans-serif;
    }
</style>
</head>
<body>
<h1>Welcome to nginx!</h1>
<p>If you see this page, the nginx web server is successfully installed and
working. Further configuration is required.</p>

<p>For online documentation and support please refer to
<a href="http://nginx.org/">nginx.org</a>.<br/>
Commercial support is available at
<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>
</body>
</html>

Browser verification
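
The request a browser sends to the NodePort can also be issued from a terminal. A minimal sketch, assuming the node address 192.168.31.34 (k8s-master01 in the Pod listing) is reachable from the machine running it; the helper names `nodeport_url` and `nodeport_status` are hypothetical, and port 30001 is the `nodePort` from nginx.yaml.

```shell
#!/bin/sh
# Build the URL a browser would open: http://<node-ip>:<nodePort>/
# The port defaults to 30001, the nodePort declared in nginx.yaml.
nodeport_url() {
    printf 'http://%s:%s/\n' "$1" "${2:-30001}"
}

# Print only the HTTP status code returned through the NodePort.
# Gives up after 5 seconds so an unreachable node does not hang the check.
nodeport_status() {
    curl -s -o /dev/null -m 5 -w '%{http_code}\n' "$(nodeport_url "$1" "$2")"
}

# Usage (needs network access to a cluster node):
#   nodeport_status 192.168.31.34
```

A status of 200 would indicate that the nginx welcome page is being served through the NodePort; any node's address should work, since a NodePort Service is exposed on every node.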
